'A social problem': Growing trend of AI deepfakes and misinformation spurs fears as technology advances

(Photo: Facebook)

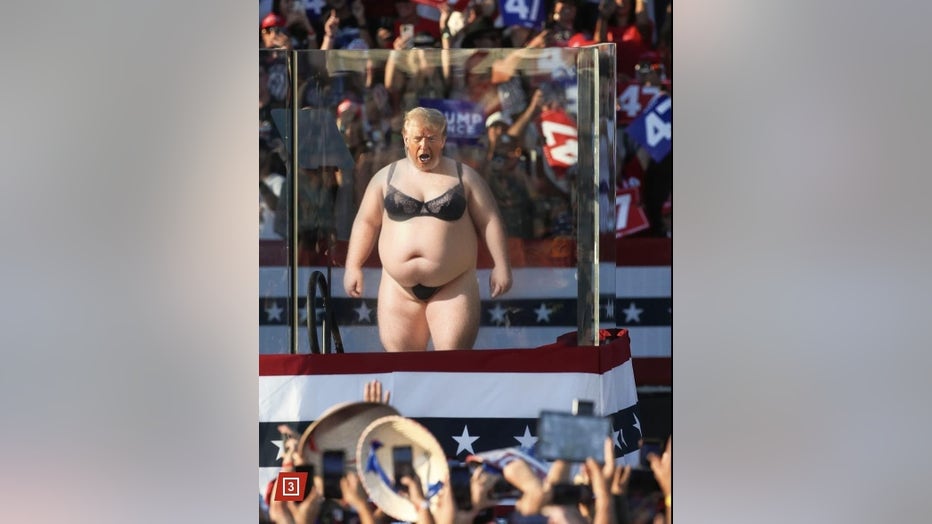

DETROIT (FOX 2) - Donald Trump clad in a lacy bra and underwear, Barack Obama feeding a blindfolded Joe Biden an ice cream cone, Kamala Harris joining Trump for his shift at McDonald’s. These images all have something in common – they’re not real, and they can be found on social media right now.

With the rise of artificial intelligence comes an increase in fake images and videos making the rounds on social media. They range from weird and obviously fake to realistic and potentially misleading.

In an attempt to get ahead of the AI curve before it had the chance to potentially impact elections, Michigan Rep. Penelope Tsernoglou sponsored legislation to require a disclosure when AI has been used to create a political ad. When discussing her bills on the House floor, she had some help from President Biden. Or did she?

"Hi Representative Tsernoglou. This is your buddy, Joe. I really like your bill that requires disclaimers on political ads that use artificial intelligence," Biden seemed to say.

However, it wasn’t the president. The representative had a friend create the deepfake as an example of how easy it is to make stunningly real content.

"I thought it would be great to share that during the hearing, just to demonstrate how easy it was to do and how it could be put together so quickly and accurately," she said.

Political deepfakes in real life

Earlier this year, Anthony Hudson, a candidate running for Congress, caught some heat when he posted a video that featured an endorsement from Martin Luther King Jr. Hudson shared the bogus endorsement to TikTok and X before later removing the post.

Though it’s clear the endorsement was fake because King is not alive, it exemplifies what is possible with the technology.

"My concern is that people would see or hear of AI-generated content, and it would be so indistinguishable from real content that people might be swayed one way or the other for or against a particular candidate or issue, and they might cast their vote based on what they saw," Tsernoglou said. "But if what they saw or heard wasn't real, then they're being misled."

While the new bills focus on computer-generated or manipulated ads, television advertisements aren’t the only place where this content is found.

A.I. presents dangers to democracy, experts say

The 2024 election could be the next great test for how fast misinformation and disinformation spread, as well as how voters combat them.

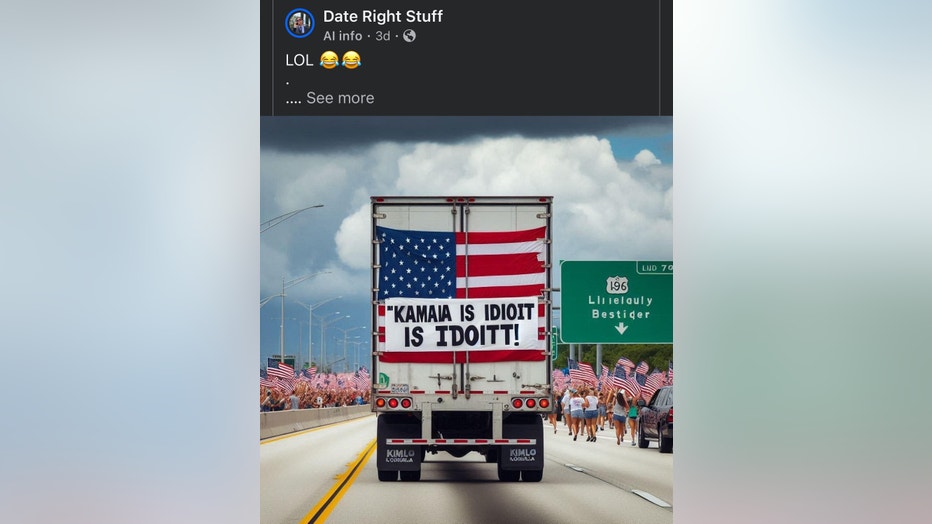

Whether it’s a photo of a politician wearing something strange or a photo showcasing something that a child allegedly made, AI-generated images have become a common sight on social media.

Misinformation isn’t new, but combine it with computer-generated photos or videos, and it is much more difficult to discern fact from fiction.

"Misinformation has been around since like the beginning of time. People have been lying to other people for personal gain or other gains. With AI, it's very easy to do," said AI expert Joe Tavares.

Tavares works in technology and has been involved with artificial intelligence since the 1990s, when he worked with speech recognition software Dragon NaturallySpeaking. In that time, he has watched the technology evolve to what it is today.

"You don’t need a wide array of skills to do this," he said, referring to generating content with AI.

Social media’s AI misinformation policies

Meta, X, and TikTok all have policies in place designed to limit the spread of misinformation on the apps and keep users informed about potentially misleading content, such as AI-generated videos and photos.

These policies are long, often overlap with other usage policies, and, like the technology itself, are constantly evolving. For instance, Meta’s most recent misinformation policy ends with a note that some information may be slightly dated, while TikTok’s policy mentions ongoing work to improve the platform.

All three companies label AI-generated content to some extent.

On X, media may be removed or labeled by the website if the company has "reason to believe that media are significantly and deceptively altered, manipulated, or fabricated." This includes using AI to create media.

X uses technology to review media, and users can also report posts for review.

On Meta platforms, including Facebook and Instagram, a label may be added alerting users to manipulated content if it is "digitally created or altered content that creates a particularly high risk of misleading people on a matter of public importance."

TikTok’s AI transparency policy says that AI-generated content uploaded from certain platforms is labeled. Users also have the option to add an AI label to content that was digitally generated or altered.

However, because these computer-generated images and videos are so complex, they can sometimes slip past the technology that scans them.

"The techniques that are used essentially with generating an image, you're generating a bunch of noise and then looping through it over and over again, slowly sifting the noise out until you see what you prompted," Tavares said. "Because of that, it's going to be really difficult to tell whether something was generated by a camera on a phone versus a computer that's running a model."

Social media challenges

While social media companies are working to get a handle on content being posted and shared, both fake news and AI present unique challenges for these websites and apps.

Meta’s misinformation policy sums up the issue of vetting content simply: "A policy that simply prohibits ‘misinformation’ would not provide useful notice to people who use our services and would be unenforceable, as we don’t have perfect access to misinformation."

Combating misinformation becomes even more complicated when it involves AI-generated content.

Resources are also a problem when it comes to stopping AI online.

"Facebook has a lot of resources, but not infinite," Tavares said. "So, the adversaries are not necessarily under the same constraints. You have a nation state like Russia or China just flooding unlimited funds into this and taking all of their smartest people and putting them at this problem."

This growing problem comes at a crucial time. With an election just around the corner, misinformation can be particularly damaging. For instance, after hurricanes recently devastated the South, fake images and information began spreading on social media, showing damage that wasn’t real and claiming the federal government wasn’t assisting those impacted.

According to the Institute for Strategic Dialogue (ISD), this misinformation mainly took aim at the Federal Emergency Management Agency (FEMA) and President Joe Biden’s administration, including Vice President Harris.

ISD said a study showed that Russian state media, social media accounts, and websites spread false information about hurricane cleanup efforts, with an apparent goal of making U.S. leadership look corrupt. That misinformation campaign included AI-generated photos of damage that never happened, including flooding at Disney World.

Melanie Smith, the director of research at ISD, said these foreign entities are using misinformation and AI to exploit issues that already exist in the U.S. as the presidential election nears.

"These are not situations that foreign actors are creating," Smith told AP. "They’re simply pouring gasoline on fires that already exist."

That metaphorical gasoline can have dire consequences when people believe what they are seeing and share it.

"That's my biggest concern, because how voting is, it's your way of expressing your voice, and you don't want your vote to be affected by misinformation," Tsernoglou said.

Then, there is the issue of AI-generated content that doesn’t contain misinformation or isn’t made to mislead.

Tavares noted that AI-generated photos are so new that some, such as Trump in a bikini, may just be people playing around with the technology to see what it can create. However, that comes with its own set of problems, as it floods timelines with fake images that can distract from real news and issues.

"A lot of people consider propaganda to be trying to change your mind or make you think a certain way, but that's not necessarily always the goal. Sometimes the goal is to just make so much noise that nobody has anything to discuss," Tavares said. "I think that's probably part of a strategy to pollute the discussion and the dialogue around ideas."

Curbing AI misinformation

Unfortunately, stopping the spread of AI-generated content isn’t as simple as social media companies putting policies in place to regulate it.

"There is no for-profit company that's going to be able to go toe to toe with that just because of the nature of it," Tavares said. "I don't know that there is a solution to the problem."

Tavares says that education is going to be the best defense against AI misinformation.

"I think educating the public and really fundamentally understanding the motivations behind that is the only way that we're going to be able to really fight this," he said. "It's kind of like a social problem more than it is like, ‘Why aren't these companies doing anything?’"

Tavares said that spotting AI right now isn’t very difficult if you take a moment to examine a photo.

"Right now it's pretty easy," he said. "It looks like it's studio lit, slightly animated. The hair is like brushed on. Maybe there's a couple extra fingers. The teeth aren’t how teeth are supposed to look."

However, as the technology advances, spotting these telltale signs probably won’t be so straightforward, Tavares said.

"It's hard to say what will make it easy to spot in the future," he said. "I suspect that people who are, again, familiar with vetting stories and somebody who works at a news organization is going to be able to pick that out pretty quick. But the average person, I can say that I'm pretty concerned."

Tavares said slowing down and verifying what you are seeing is going to be crucial for preventing the spread of fake images and videos.

"Society as a whole needs to recognize that this is a thing and start to kind of pull back a little bit on their reactionary tendencies towards responding to things that haven't necessarily been vetted," he said. "It's on everybody individually to be able to recognize this or at least recognize that maybe it's not real, and they shouldn't share it until they've been able to confirm that in some way."

Tavares noted that AI images often appeal to people's emotions, and tapping into emotions helps these fake photos spread.

"People aren't necessarily aware of the motivations behind it. They just see, like, a girl that's been abandoned by her family in Hurricane Helene and they're not assuming anything else about it," he said. "They're like, 'This kid looks like they're in trouble. We should do something.' And then they share that post and other people see it."

Tavares’ overall advice? Breathe and step back before immediately reacting to what you see online, especially when the images are specifically designed to prey on emotions.

"If you're feeling angry or upset because of something you saw on the Internet, maybe you step away from those feelings for a minute and really analyze why you're feeling that way and then dig a little bit deeper into corroborating what you saw with the reality on the ground," he said.

The Source: Information in this article is from Michigan Rep. Penelope Tsernoglou, AI expert Joe Tavares, and the ISD, via the Associated Press.